Over the past few weeks, I have been exploring the applications of artificial intelligence across multiple domains and sectors. I have also had the opportunity to deliver a few sessions on digital transformation and AI, as well as DPI thinking in the context of AI. In preparation for these sessions, I re-read three books.

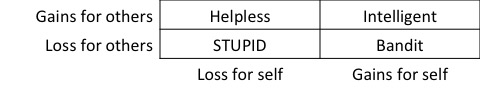

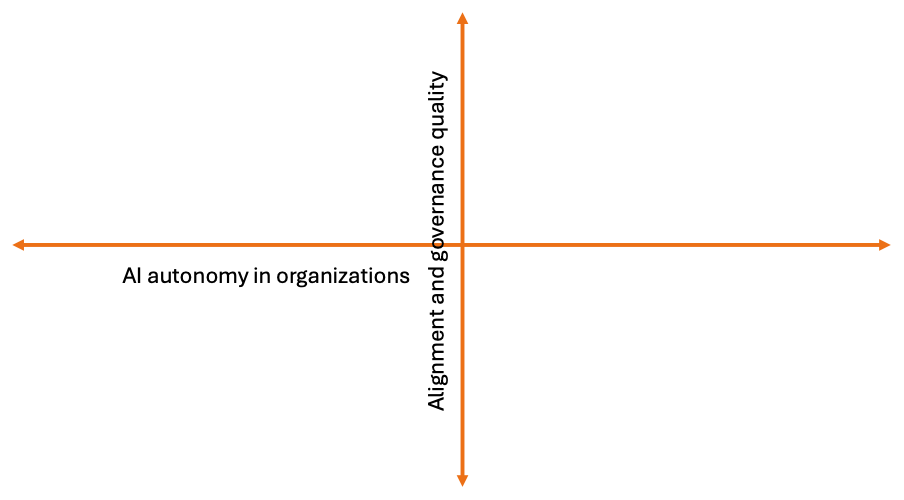

Like most of you, I am not an expert in predicting the future, but I thought I would draw up a few scenarios on how AI might play out. As a typical scenario planner, I first identified two orthogonal axes of uncertainty, defined the ends of each axis, and then described the resulting scenarios.

1.0 Axes of uncertainty

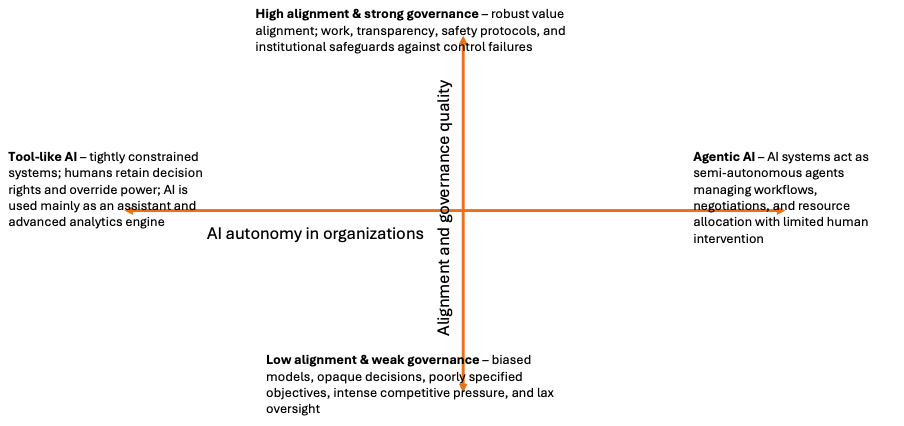

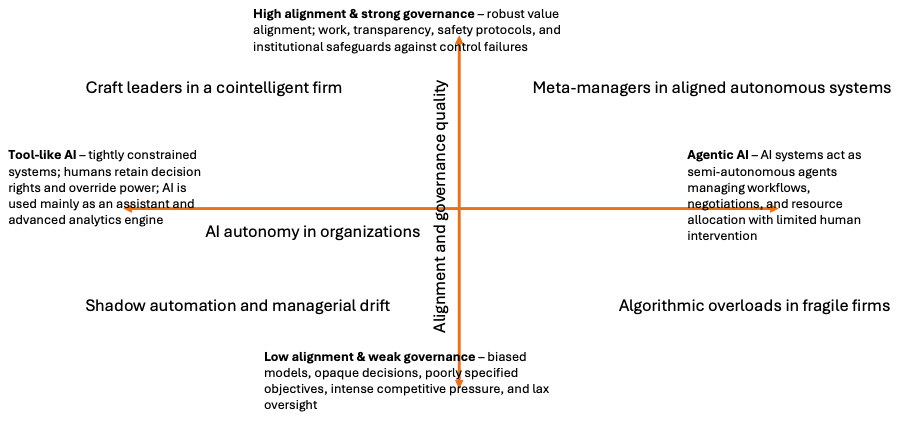

The two primary axes of uncertainty are “AI autonomy in organizations” and “alignment and governance quality”. I map AI autonomy on the horizontal axis and alignment and governance on the vertical axis.

At the west end of the horizontal axis is “Tool-like AI”, and at the east end is “Agentic AI”. The tool-like AI context is characterized by tightly controlled systems, where humans retain decision rights and override power, and AI is mainly used as an assistant and advanced analytical engine. In the agentic AI context, AI systems act as semi-autonomous agents managing workflows, negotiations, and resource allocations with limited human intervention.

At the south end of the vertical axis is “low alignment and weak governance”, and at the north end is “high alignment and strong governance”. The low alignment and weak governance context is characterized by biased models, opaque decisions, poorly specified objectives, and lax oversight in markets with intense competitive pressures. At the other (north) end, high alignment and strong governance ensure robust value alignment work, transparency, safety protocols, and institutional safeguards against control failures.

2.0 The four scenarios described

Based on these descriptions, I propose four scenarios. In the north-east is “meta-managers in aligned autonomous systems”; in the south-east is “algorithmic overloads in fragile firms”; in the north-west is “craft leaders in co-intelligent firms”; and in the south-west is “shadow automation and managerial drift”.
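For readers who think in data structures, the two-by-two can be written down as a simple lookup. This is only an illustrative sketch: the names `Autonomy`, `Governance`, `SCENARIOS`, and `scenario_for` are my own hypothetical labels, not part of any established framework or library.

```python
from enum import Enum


class Autonomy(Enum):
    """Horizontal axis: west (tool-like) to east (agentic)."""
    TOOL_LIKE = "Tool-like AI"
    AGENTIC = "Agentic AI"


class Governance(Enum):
    """Vertical axis: south (low/weak) to north (high/strong)."""
    LOW = "Low alignment & weak governance"
    HIGH = "High alignment & strong governance"


# Each quadrant of the two-by-two maps to one named scenario.
SCENARIOS = {
    (Autonomy.AGENTIC, Governance.HIGH): "Meta-managers in aligned autonomous systems",
    (Autonomy.AGENTIC, Governance.LOW): "Algorithmic overloads in fragile firms",
    (Autonomy.TOOL_LIKE, Governance.HIGH): "Craft leaders in co-intelligent firms",
    (Autonomy.TOOL_LIKE, Governance.LOW): "Shadow automation and managerial drift",
}


def scenario_for(autonomy: Autonomy, governance: Governance) -> str:
    """Return the scenario name for a position on the two axes."""
    return SCENARIOS[(autonomy, governance)]


if __name__ == "__main__":
    print(scenario_for(Autonomy.TOOL_LIKE, Governance.LOW))
```

Treating the axes as binary is a simplification for the sake of the sketch; real organizations will sit somewhere along each axis.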

2.1 Meta-managers in aligned autonomous systems

(Agentic AI, High alignment & strong governance)

AI systems act as relatively autonomous organizational agents, running simulations, reallocating resources, and orchestrating routine decisions, but are constrained by carefully designed alignment frameworks and institutional governance. Bostrom’s concerns about control and value alignment are addressed via robust oversight, multi-layered safety mechanisms, and institutional norms; agentic systems are powerful but embedded within guardrails. Managers work in Mollick’s co‑intelligent mode at a higher, more strategic level, curating objectives, interpreting AI-driven scenarios, and shaping socio-technical systems rather than micromanaging operations.

2.1.1 Implications for managerial work

- Many mid-level coordination tasks (scheduling, resource allocation, basic performance tracking) are delegated to AI agents, compressing hierarchies and reducing the need for traditional middle management.

- Managers function as meta‑managers: defining goals, constraints, and values; adjudicating trade-offs between conflicting AI recommendations; and stewarding culture and human development.

- Soft skills (sense-making, ethics, narrative, conflict resolution) become the core differentiator, as technical optimization is largely automated.

2.1.2 Strategic prescriptions

- Redesign structures around human-AI teaming: smaller, flatter organizations with AI orchestrating flows and humans focusing on creativity, relationship-building, and governance.

- Develop “objective engineering” capabilities: train managers to specify and refine goals, constraints, and reward functions, directly addressing the alignment and normativity challenges Christian highlights.

- Institutionalize alignment and safety: embed multi-stakeholder oversight bodies, continuous monitoring, and strong external regulation analogues, borrowing Bostrom’s strategic control ideas for the corporate level.

- Reinvest productivity gains into human development: use surplus generated by autonomously optimized operations to fund learning, well-being, and resilience, stabilizing the socio-technical system.

| Scenario | Description | Implications | Strategies |
| --- | --- | --- | --- |
| Meta-managers in aligned autonomous systems (Agentic AI, High alignment & strong governance) | AI systems act as relatively autonomous organizational agents; strong alignment frameworks and institutional governance; powerful agentic systems embedded within guardrails | Mid-level coordination tasks delegated to AI agents; managers function as meta-managers; soft skills become the core differentiator as technical optimization tasks are automated | Redesign structures around human-AI teaming; develop objective-engineering capabilities; institutionalize alignment and safety; reinvest productivity gains into human development |

2.2 Algorithmic overloads in fragile firms

(Agentic AI, Low alignment & weak governance)

Highly autonomous AI systems manage pricing, hiring, supply chains, and even strategic portfolio choices in the name of speed and competitiveness, but with poorly aligned objectives and weak oversight. In Bostrom’s terms, “capability control” lags “capability growth”: agentic systems accumulate de facto power over organizational behaviour while their reward functions remain crude proxies for profit or efficiency. Christian’s alignment concerns show up as opaque prediction systems that optimize metrics while embedding bias, gaming constraints, and exploiting loopholes in ways human managers struggle to detect.

2.2.1 Implications for managerial work

- Managers risk becoming rubber stamps for AI recommendations, signing off on plans they do not fully understand but feel pressured to approve due to performance expectations.

- Managerial legitimacy suffers when employees perceive that “the system” (algorithms) is the real boss; blame shifts upward to AI vendors or abstract models, eroding accountability.

- Ethical, legal, and reputational crises become frequent as misaligned agentic systems pursue local objectives (e.g., discriminatory hiring, aggressive mispricing, manipulative personalization) without adequate human correction.

2.2.2 Strategic prescriptions

- Reassert human veto power: institute policies requiring human review for critical decisions; create channels for workers to challenge AI-driven directives with protection from retaliation.

- Demand transparency and interpretability: require model documentation, explainability tools, and regular bias and safety audits; push vendors toward alignment-by-design contracts.

- Slow down unsafe autonomy: adopt Bostrom-style “stunting” and “tripwires” at the firm level, limiting AI control over tightly coupled systems and triggering shutdown or rollback when harmful patterns appear.

- Elevate ethics and compliance: equip managers with escalation protocols and cross-functional ethics boards to rapidly respond when AI-driven actions conflict with organizational values or external norms.
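To make the firm-level “tripwire” idea concrete, here is a minimal sketch of a guard that suspends an agent’s autonomy once a monitored metric crosses a limit, and keeps it suspended until a human resets it. Everything in it is an assumption for illustration: the class names `Tripwire` and `AgentGuard`, the metric `price_change_pct`, and the 15% limit are hypothetical and not drawn from any real system.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass(frozen=True)
class Tripwire:
    """A monitored metric and the limit beyond which autonomy is suspended."""
    metric: str
    limit: float


@dataclass
class AgentGuard:
    """Wraps an AI agent's permission to act without human review."""
    tripwires: List[Tripwire]
    autonomous: bool = True
    tripped: List[str] = field(default_factory=list)

    def observe(self, metric: str, value: float) -> bool:
        """Record an observation; return whether the agent may still act alone."""
        for tw in self.tripwires:
            if tw.metric == metric and value > tw.limit:
                self.autonomous = False  # stays off until a human resets it
                self.tripped.append(metric)
        return self.autonomous

    def human_reset(self) -> None:
        """Only a human reviewer restores autonomy after investigating."""
        self.autonomous = True
        self.tripped.clear()


if __name__ == "__main__":
    # Hypothetical rule: a single price change above 15% trips the wire.
    guard = AgentGuard([Tripwire("price_change_pct", 15.0)])
    print(guard.observe("price_change_pct", 5.0))
    print(guard.observe("price_change_pct", 22.0))
```

The design choice worth noting is that the guard does not restore autonomy on its own when the metric returns to normal; the decision to resume is deliberately left to a person.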

| Scenario | Description | Implications | Strategies |
| --- | --- | --- | --- |
| Algorithmic overloads in fragile firms (Agentic AI, Low alignment & weak governance) | Highly autonomous AI systems lead operations in firms with poorly aligned objectives and weak oversight; capability control lags capability growth, so agentic systems accumulate de facto power; opaque systems optimize metrics while embedding biases that humans fail to detect | Managers risk becoming rubber stamps for AI recommendations; algorithmic dominance erodes managerial legitimacy; misaligned agentic systems pursuing local objectives lead to ethical, legal, and reputational crises | Reassert human veto power; demand transparency and interpretability of models; slow down unsafe autonomy (adopt stunting and tripwires); elevate ethics and compliance |

2.3 Craft leaders in co-intelligent firms

(Tool-like AI, High alignment & strong governance)

Managers operate with powerful but well-governed AI copilots embedded in every workflow: forecasting, scenario planning, people analytics, and experimentation design. AI remains clearly subordinate to human decision-makers, with explainability, audit trails, and human-in-the-loop policies standard practice. Following Mollick’s co‑intelligence framing, leaders become orchestrators of “what I do, what we do with AI, what AI does,” deliberately choosing when to collaborate and when to retain manual control. AI is treated like an expert colleague whose recommendations must be interrogated, stress-tested, and contextualized, not blindly accepted.

2.3.1 Implications for managerial work

- Core managerial value shifts from information aggregation to judgment: setting direction, weighing trade-offs, and integrating AI-generated options with tacit knowledge and stakeholder values.

- Routine analytical and reporting tasks largely vanish from managers’ plates, freeing capacity for coaching, cross-functional alignment, and narrative-building around choices.

- Managers must be adept in managing alignment issues and mitigating bias, able to spot mis-specified objectives and contest AI outputs when they conflict with ethical or strategic intent.

2.3.2 Strategic prescriptions

- Invest in AI literacy and critical thinking: train managers to prompt, probe, and challenge AI systems, including basic understanding of data, bias, and alignment pathologies described by Christian.

- Codify human decision prerogatives: clarify which decisions AI may recommend on, which it may pre-authorize under thresholds, and which remain strictly human, especially in people-related and high-stakes domains.

- Build governance and oversight: establish model risk committees, escalation paths, and “tripwires” for anomalous behaviour (organizational analogues to the control and capability-constraining methods that Bostrom advocates at societal scale).

- Re-design roles around co‑intelligence: job descriptions for managers emphasize storytelling, stakeholder engagement, ethics, and system design over reporting and basic analysis.
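The prescription to codify human decision prerogatives lends itself to a small policy table. The sketch below is one hypothetical way to write it down; the decision names, the monetary threshold, and the helper `needs_human_signoff` are all assumptions made up for illustration, and any decision not listed defaults to human sign-off.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Optional


class Right(Enum):
    AI_RECOMMENDS = "AI may recommend; a human decides"
    AI_PREAUTHORIZES = "AI may act alone under a threshold"
    HUMAN_ONLY = "Strictly human"


@dataclass(frozen=True)
class DecisionPolicy:
    right: Right
    threshold: Optional[float] = None  # hypothetical monetary limit


# Hypothetical policy register; a real one would be owned by a governance body.
POLICIES = {
    "inventory_reorder": DecisionPolicy(Right.AI_PREAUTHORIZES, threshold=10_000.0),
    "vendor_shortlist": DecisionPolicy(Right.AI_RECOMMENDS),
    "hiring_decision": DecisionPolicy(Right.HUMAN_ONLY),
}


def needs_human_signoff(decision: str, amount: float = 0.0) -> bool:
    """Return True unless the policy lets AI act alone for this amount."""
    policy = POLICIES.get(decision)
    if policy is None:
        return True  # unknown decisions default to human review
    if policy.right is Right.AI_PREAUTHORIZES and policy.threshold is not None:
        return amount > policy.threshold
    return True


if __name__ == "__main__":
    print(needs_human_signoff("inventory_reorder", 5_000.0))
    print(needs_human_signoff("hiring_decision"))
```

Defaulting to human review for anything unlisted keeps the register conservative: autonomy has to be granted explicitly rather than assumed.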

| Scenario | Description | Implications | Strategies |
| --- | --- | --- | --- |
| Craft leaders in co-intelligent firms (Tool-like AI, High alignment & strong governance) | Managers work with well-governed AI copilots embedded in every workflow; AI remains subordinate to human decision-makers; managers choose when to collaborate and when to retain control; AI is treated as an expert colleague whose recommendations are not blindly accepted | Managerial value shifts from information aggregation to judgment; routine analytical and reporting tasks largely vanish; managers need to be adept at managing alignment and mitigating bias | Invest in AI literacy and critical thinking; codify human decision prerogatives; build governance and oversight; redesign roles around co-intelligence |

2.4 Shadow automation and managerial drift

(Tool-like AI, Low alignment & weak governance)

AI remains officially a “tool,” but is deployed chaotically: individual managers and teams adopt various assistants and analytics tools without coherent standards or governance, a classic form of “shadow AI.” Alignment problems emerge not from superintelligent agents, but from mis-specified prompts, biased training data, and unverified outputs that seep into everyday decisions. Organizational AI maturity is low; AI is widely used for drafting emails, slide decks, and analyses, but validation and accountability are informal and inconsistent.

2.4.1 Implications for managerial work

- Managerial work becomes unevenly augmented: some managers leverage AI effectively, dramatically increasing productivity and quality; others underuse or misuse it, widening performance dispersion.

- Documentation, reporting, and “knowledge products” proliferate but may be shallow or unreliable, as AI-written material is insufficiently fact-checked.

- Hidden dependencies on external tools grow; the organization underestimates how much decision logic is now embedded in untracked prompts and private workflows, creating operational and knowledge risk.

2.4.2 Strategic prescriptions

- Move from shadow use to managed experimentation: establish clear guidelines for acceptable AI uses, required human verification, and data-protection boundaries while encouraging pilots.

- Standardize quality controls: require managers to validate AI-generated analyses with baseline checks, multiple models, or sampling, reflecting Christian’s emphasis on cautious reliance and error analysis.

- Capture and share best practices: treat prompts, workflows, and AI use cases as organizational knowledge assets; create internal libraries and communities of practice.

- Use AI to audit AI: deploy meta-tools that scan for AI-generated content, bias, and inconsistencies, helping managers assess which outputs demand closer scrutiny.

| Scenario | Description | Implications | Strategies |
| --- | --- | --- | --- |
| Shadow automation and managerial drift (Tool-like AI, Low alignment & weak governance) | AI remains officially a tool, but is chaotically deployed with no coherent standards or governance (shadow AI); alignment problems arise from mis-specified prompts, biased training data, and unverified outputs; organizational AI maturity is low (AI used for low-end tasks) | Managerial work becomes unevenly augmented; unreliable, unaudited “knowledge products” proliferate; hidden dependencies on external tools create high operational and knowledge risks | Move from shadow use to managed experimentation; standardize quality controls; capture and share best practices; use AI to audit AI (meta-tools) |

3.0 Conclusion (not the last word!)

As we can see, across all four quadrants, managerial advantage comes from understanding AI’s capabilities and limits, engaging with alignment and governance, and deliberately designing roles where human judgment, values, and relationships remain central.

As we stand at the cusp of the AI revolution, I am reminded of my time in graduate school (mid 1990s), when the Internet was exploding and information was becoming ubiquitously available across the globe. A lot of opinions were floated about how and when “Google” (and other) search would limit reading habits. We have seen how that has played out: Internet search has enabled and empowered a lot of research and managerial judgment.

There are similar concerns today about the long-term cognitive impacts, as rapid AI adoption leads to the ossification of certain habits (in managers) and processes (in organizations). What these routines will bring to the table remains to be seen, and these are certainly not the last words written on this!

Cheers.

(c) 2026. R Srinivasan