What is artificial in AI?

As the four-day long weekend loomed and I was closing an executive education programme focused on digitalization and technology, especially in the context of India and emerging economies, I read this piece on AI ethics by IIMB alumnus Dayasindhu. He discusses the differences between teleological and deontological perspectives on AI and ethics. It got me thinking about technological unemployment (unemployment caused by firms’ adoption of technologies such as AI and robotics). For those of you interested in a little bit of history, read this piece (also by Dayasindhu) on how India, especially the Indian banking industry, adopted technology.

In my classes on digital transformation, I introduce the potential of Artificial Intelligence (AI) and its implications for work and skills. My students (in India and Germany) and Executive Education participants will remember these discussions. One of my favourite conversations has been about what kinds of jobs will be disrupted by AI and robotics. I argue that, contrary to popular wisdom, we will have robots washing our clothes much earlier than robots folding the laundry. While washing clothes is a simple operation (for robots), folding laundry requires a very complex calculation: identifying different clothes of irregular shapes, fabrics and weights (Read more here). Moreover, most robots we have are single-use, made for a specific purpose, unlike the human arm, which is truly multi-purpose (Read more here). Yes, there have been great advancements on these two fronts, but the challenge remains: AI has progressed far more in certain skills that seem very complex to humans, whereas robots struggle to perform certain tasks that seem very easy to humans, like riding a bicycle (which a four-year-old child can do with relative ease). The explanation lies in Moravec’s Paradox, which Hans Moravec and others articulated in the 1980s!

What is Moravec’s Paradox?

“It is comparatively easy to make computers exhibit adult level performance on intelligence tests or playing checkers, and difficult or impossible to give them the skills of a one-year-old when it comes to perception and mobility”.

Moravec, 1988

It is very difficult to reverse-engineer human skills that are unconscious. It is easier to reverse-engineer motor processes (think factory automation), cognitive skills (think big data analytics), or routinised computations (think predictive/prescriptive algorithms).

“In general, we’re least aware of what our minds do best…. We’re more aware of simple processes that don’t work well than of complex ones that work flawlessly”.

Minsky, 1986

Moravec’s paradox proposes that this distinction has its roots in evolution. As a species, we have spent millions of years in the selection, mutation, and retention of specific skills that have allowed us to survive and succeed in this world. Some examples of such skills include learning a language, sensory-motor skills like riding a bicycle, and drawing basic art.

What are the challenges?

Based on my reading and experience, I envisage five major challenges for AI and robotics in the days to come.

One, artificially intelligent machines need to be trained to learn languages. Yes, there have been great advances in natural language processing (NLP) that have contributed to voice recognition and responses. However, there are still gaps in how machines interpret sarcasm and figures of speech. A couple of years ago, a man tweeted to an airline about his misplaced luggage in a sarcastic tone, and the customer service bot responded with thanks, much to the amusement of many social media users. NLP involves the ability to read, decipher, understand and make sense of natural language. Codifying the grammar of complex languages like English is hard enough; differences in accent make deciphering spoken language even more difficult for machines. Add to this contextually significant figures of speech and idioms: what do you expect computers to understand when you say, “the old man down the street kicked the bucket”?
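To see why sarcasm trips up machines, consider a deliberately naive sentiment scorer that only counts “positive” and “negative” words. This is a minimal Python sketch; the word lists, the function name `naive_sentiment` and the sample tweet are hypothetical illustrations, not how the airline’s bot (or any modern NLP system) actually works.

```python
import re

# Hypothetical word lists for a deliberately naive, context-free scorer.
POSITIVE = {"great", "thanks", "love", "awesome", "perfect"}
NEGATIVE = {"lost", "delayed", "angry", "terrible", "broken"}


def naive_sentiment(text: str) -> str:
    """Label text 'positive', 'negative' or 'neutral' by counting lexicon hits.

    Word order, tone and context are ignored, which is exactly why
    sarcasm and idioms fool it.
    """
    words = re.findall(r"[a-z']+", text.lower())
    score = sum(w in POSITIVE for w in words) - sum(w in NEGATIVE for w in words)
    if score > 0:
        return "positive"
    if score < 0:
        return "negative"
    return "neutral"


tweet = "Great, thanks for sending my bag to another city. Just perfect!"
print(naive_sentiment(tweet))  # a complaint, but labelled positive
print(naive_sentiment("the old man down the street kicked the bucket"))
```

A human reads the tweet as a complaint; the scorer sees “great”, “thanks” and “perfect” and labels it positive, and it finds nothing at all in “kicked the bucket”. Context-aware models do much better, but this is the gap the paragraph above describes.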

Two, apart from language, machine-to-human communication is tricky. We can have “pick-and-place” robots in industrial contexts; can we have “give-and-take” robots in customer service settings? Imagine a food-serving robot in a fine-dining restaurant: how do we train the robot to read diners’ moods and suggest the right cuisine and music to suit the occasion? Most of the robots we have as I write this exhibit puppy-like behaviour, a far cry from naturally intelligent human beings. Humans need friendliness, understanding, and empathy in their social interactions, which are very complex to programme.

Three, there have been a lot of advances in environmental awareness and responses. Self-navigation and communication have improved significantly thanks to technologies like Simultaneous Localisation and Mapping (SLAM), and we are able to visually and sensorily improve augmented reality (AR) experiences. Still, having human beings in the midst of a robot swarm is fraught with risks. Not only do robots need to sense and respond to the location and movement of other robots, they also need to respond to the “unpredictable” movements and responses of humans. When presented with danger, different humans respond differently based on their psychologies and personalities, most often shaped by a series of prior experiences and perceived self-efficacy. Robots still find it difficult to sense, characterise, and respond to such interactions. Today’s social robots are designed for short interactions with humans, not for learning the social and moral norms that lead to sustained, long-term relationships.

Four, developing multi-functional robots that can reason. Reasoning is the ability to interpret something logically in order to form a conclusion or judgment. For instance, it is easy for a robot to pick up a screwdriver from a bin, but quite another thing to pick it up in the right orientation and use it appropriately. The robot needs to be programmed to realise when the tool is held in the wrong orientation and to self-correct to the right orientation for optimal use.
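The self-correction step can be pictured as a simple feedback loop: measure the tool’s orientation, compare it with the desired one, rotate part of the way back, and repeat. Below is a minimal Python sketch of proportional correction, assuming the orientation is a single angle in degrees and the “sensor” reading is simulated; the function names, gain and tolerance are hypothetical. The genuinely hard part for a real robot is perceiving that angle in the first place.

```python
def angle_error(target: float, measured: float) -> float:
    """Signed smallest difference (target - measured), wrapped to [-180, 180)."""
    return (target - measured + 180.0) % 360.0 - 180.0


def correct_orientation(measured: float, target: float = 0.0,
                        gain: float = 0.5, tolerance: float = 1.0,
                        max_steps: int = 50) -> tuple[float, int]:
    """Rotate the (simulated) gripper in proportional steps until within tolerance.

    Returns the final angle and the number of correction steps taken.
    """
    steps = 0
    while abs(angle_error(target, measured)) > tolerance and steps < max_steps:
        # Wrong orientation detected: rotate a fraction (gain) of the way back.
        measured = (measured + gain * angle_error(target, measured)) % 360.0
        steps += 1
    return measured, steps


# Screwdriver picked up nearly upside down (170 degrees off the desired grip).
final, steps = correct_orientation(measured=170.0)
print(f"final angle {final:.2f} deg after {steps} steps")
```

Each step halves the remaining error here (gain 0.5), so the loop settles within the 1-degree tolerance after a handful of iterations; the `max_steps` cap simply keeps the sketch from looping forever if the simulated correction never converges.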

Five, even if we can train a robot with a variety of sensors to develop logical reasoning through detailed pattern evaluations and algorithms, it would be difficult to train it to make judgments, for instance, to tell good from evil. Check out MIT’s Moral Machine here. Apart from developing morality in the machine, how can we programme it not just to be consistent in behaviour but also to remain fair and use appropriate criteria for decision-making? Imagine a table-cleaning robot that knows where to leave the cloth when someone rings the doorbell. It needs to be programmed to understand when to stop one activity and when to start another. Given the variety of contexts humans engage with daily, replicating what they learn naturally will surely take complex programming.

Data privacy, security and accountability

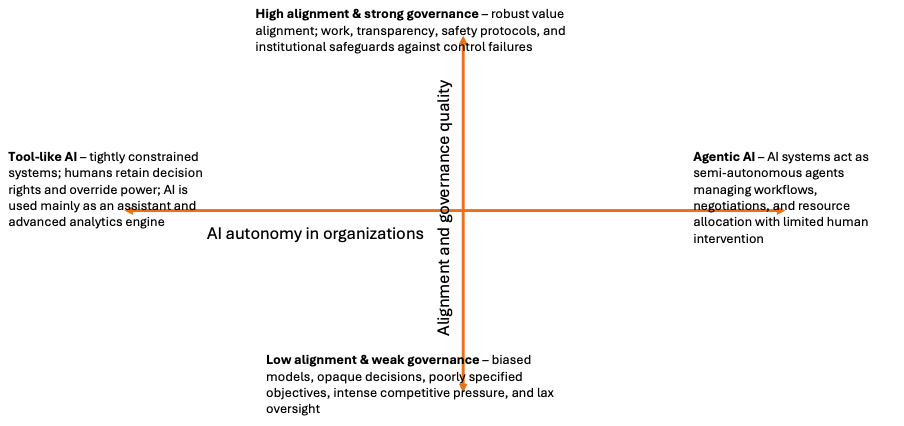

Add to all these the issues around data privacy and security. Given that we need to provide robots and AI systems with enough data about humans, and we have limited ability to programme these systems, privacy issues are critical. Consent is the key word in privacy, but when we are driving in the midst of autonomous vehicles (AVs), which collect a great deal of data to navigate, we need strong governance and accountability. When an AV is involved in an accident with a pedestrian, who is accountable: the emergency driver in the AV; the programmer of the AV; the manufacturer of the vehicle; any of the hardware manufacturers, such as the makers of cameras or sensors that did not do their jobs properly; or the cloud service provider that did not respond soon enough for the AV to save lives? Such questions are too important to relegate to a later date, to be answered post facto once accidents occur.

AI-induced technological unemployment

At the end of all these conversations, when I look around me, I see three kinds of jobs being lost to technological change: a) low-end white-collar jobs, like accountants and clerks; b) low-skilled data analysts, like those at a pathology lab interpreting a clinical report or a law apprentice doing contract reviews; and c) hazardous-monotonous or random-exceptional work, like monitoring a volcano’s activity or seismic measurements for earthquakes.

Traditional blue-collar jobs like factory workers, bus conductors/drivers, repair and refurbishment mechanics, capital machinery installation, agricultural field workers, and housekeeping staff will take a long time to be lost to AI/robotics. First, these jobs are highly unpredictable; second, they involve significant judgment and reasoning; and third, the costs of automating them would possibly far outweigh the benefits (due to low labour costs and high coordination costs). Not all blue-collar jobs are safe, though. Take, for instance, warehouse staff: with pick-and-place robots, automatic forklifts, and technologies like RFID sensors, a lot of jobs could be lost. Especially when quick response is the source of competitive advantage in warehousing operations, automation will greatly reduce errors and increase the reliability of operations.

As Byron Reese wrote in his book The Fourth Age: Smart Robots, Conscious Computers, and the Future of Humanity, “order takers at fast food places may be replaced by machines, but the people who clean-up the restaurant at night won’t be. The jobs that automation affects will be spread throughout the wage spectrum.”

In summary, to understand the nature and quantity of technological unemployment (job losses due to AI and robotics), we need to ask three questions. Is the task codifiable? (On average, tasks that the human race has learnt in the past few decades are the easiest to codify.) Is it possible to reverse-engineer it? (Can we break the task into smaller tasks?) Does the task lend itself to a series of decision rules? (Can we create a comprehensive set of decision rules that could be programmed into neat decision trees or matrices?) If you answered yes to these questions with reference to your job or task, go learn something new and look for another job!
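The three questions work like a simple screening rule. Here is a minimal Python sketch; the `Task` fields, the `automation_exposure` function, the “partial” and “low” labels, and the yes/no answers given for the two example tasks are hypothetical illustrations, not a validated model of job risk. Only the all-yes case mirrors the rule stated above.

```python
from dataclasses import dataclass


@dataclass
class Task:
    name: str
    codifiable: bool            # Is the task codifiable?
    reverse_engineerable: bool  # Can it be broken into smaller tasks?
    rule_based: bool            # Does it lend itself to a set of decision rules?


def automation_exposure(task: Task) -> str:
    """Count 'yes' answers to the three questions and map them to a rough label.

    'high' (all three yes) follows the rule in the text; the 'partial' and
    'low' bands are an illustrative assumption.
    """
    yes = sum([task.codifiable, task.reverse_engineerable, task.rule_based])
    if yes == 3:
        return "high"
    if yes == 0:
        return "low"
    return "partial"


# Illustrative answers only.
tasks = [
    Task("contract review by a law apprentice", True, True, True),
    Task("housekeeping", False, False, False),
]
for t in tasks:
    print(f"{t.name}: {automation_exposure(t)}")
```

Counting yes answers is the simplest possible design; a fuller version might weight the questions or answer them per sub-task, since most jobs bundle tasks with different exposure.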

Cheers.

© 2019. R Srinivasan, IIM Bangalore